Why is Data Analytics Infrastructure Important?

by Hannah Barrett, on July 22, 2021

What is Data Infrastructure?

Simply put, data analytics infrastructure refers to the physical and virtual servers, and the way they are configured, that store and manage your data. Organizations can choose from many different data management systems and databases, depending on their needs.

What are the Data Infrastructure Options?

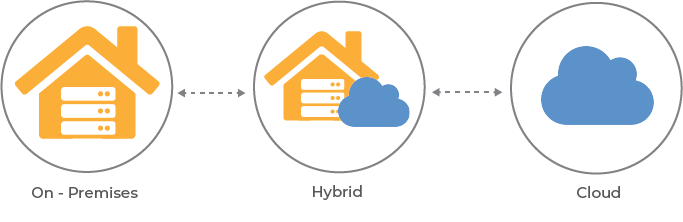

The common deployment options you may be familiar with today are on-premises, cloud, and hybrid. Each has its own benefits and potential drawbacks, but businesses, including banks, are increasingly moving to cloud-native server infrastructure to host their data and other workloads.

The Challenges of Poor Data Infrastructure

Without the proper infrastructure in place, a lot can go wrong in your day-to-day operations. With an on-premises solution, you may face more unplanned downtime than you expected when a hard drive needs to be replaced or a patch upgrade goes south. Redundancy is king, but it can also become expensive as your data volumes grow over time.

Additionally, if your data remains siloed rather than consolidated into a data warehouse, your analysts may spend a large portion of their time manually blending data, which reduces their overall efficiency. That's why it is important to have data infrastructure that supports your primary business goals.

The Benefits of Strong Data Infrastructure

A strong data analytics infrastructure strategy improves efficiency and productivity, makes collaboration easier, and lets you access your information from anywhere, provided you have proper authentication in place.

Some benefits of a proper data management system are:

- Reduced operational costs

- More focus on your core competency

- Higher efficiency through process analysis & improvement

Example: Arkatechture's Data Infrastructure

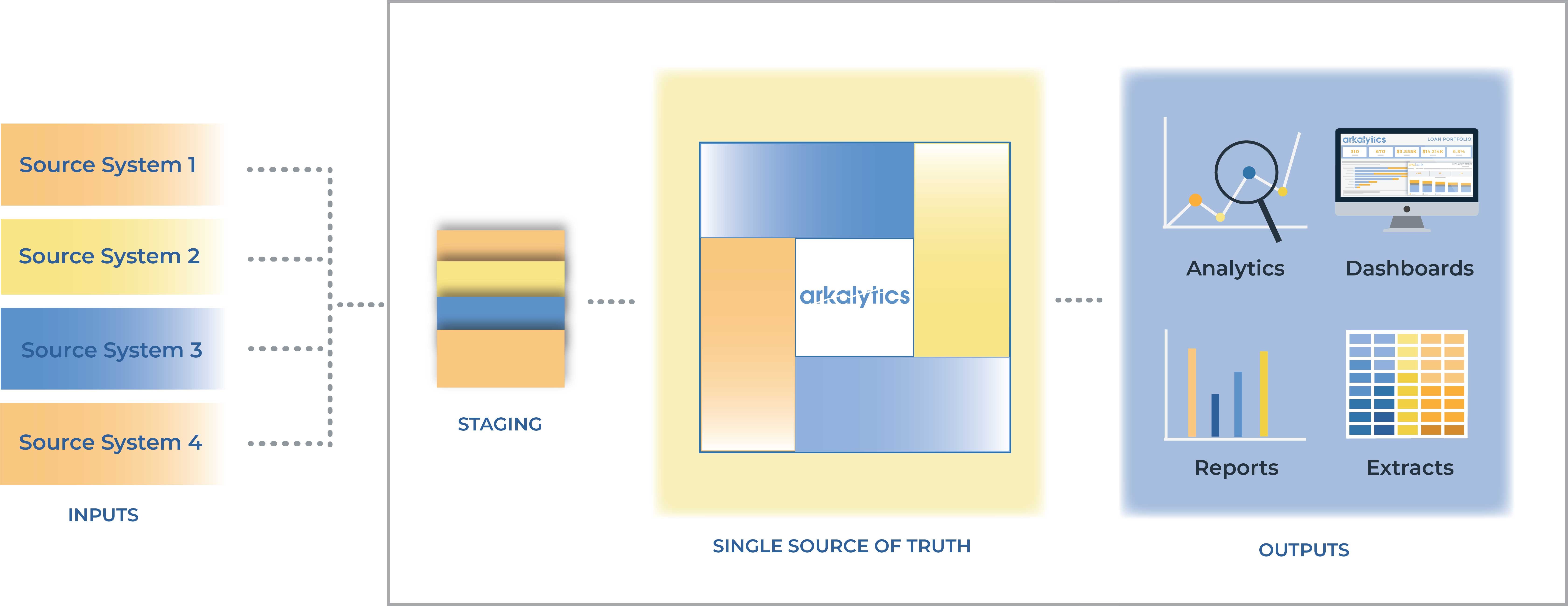

At Arkatechture, we work with a variety of cloud providers, such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud. Everything is built with security and scalability as priorities. Data from multiple source systems is ingested into our lean data pipeline, which runs within the server infrastructure as a collection of about 40 containers that together ingest all different types of data. And this is just the staging phase.

The data pipeline is a vital service we provide, and it must be completed before any other step. In some cases, a client only needs help with this step and handles building dashboards themselves.

In this pipeline example, data is extracted, loaded into the database, and then transformed (an extract, load, transform, or ELT, pattern) for use in data analytics, business intelligence, predictive modeling, and machine learning. From there, your analysts can use trusted data sets for in-depth analysis and decision-making.
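To make the extract, load, transform order concrete, here is a minimal Python sketch. It is not Arkatechture's pipeline: the two CSV exports, the table names (`stg_core`, `stg_crm`, `balance_by_region`), and the use of an in-memory SQLite database as a stand-in for a cloud data warehouse are all hypothetical, chosen only to show raw data landing in staging tables before it is transformed inside the database.

```python
import csv
import io
import sqlite3

# Hypothetical source-system exports (illustrative names and columns only),
# standing in for, e.g., a core banking system and a CRM.
CORE_EXPORT = """member_id,balance
1,2500.00
2,130.50
3,980.25
"""

CRM_EXPORT = """member_id,region
1,North
2,South
3,North
"""


def extract(csv_text):
    """Extract: read raw rows from a source export without modifying them."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def load(conn, table, rows):
    """Load: copy the raw rows as-is into a staging table of TEXT columns.

    Table and column names are trusted constants in this sketch; real code
    should not build SQL identifiers from untrusted input.
    """
    columns = list(rows[0].keys())
    column_defs = ", ".join(f"{c} TEXT" for c in columns)
    placeholders = ", ".join("?" for _ in columns)
    conn.execute(f"CREATE TABLE {table} ({column_defs})")
    conn.executemany(
        f"INSERT INTO {table} VALUES ({placeholders})",
        [tuple(row[c] for c in columns) for row in rows],
    )


def transform(conn):
    """Transform: cast, join, and aggregate the staged data inside the database."""
    conn.execute(
        """
        CREATE TABLE balance_by_region AS
        SELECT crm.region AS region,
               ROUND(SUM(CAST(core.balance AS REAL)), 2) AS total_balance
        FROM stg_core AS core
        JOIN stg_crm AS crm ON core.member_id = crm.member_id
        GROUP BY crm.region
        """
    )


def run_pipeline():
    """Run extract -> load -> transform and return the analytics-ready rows."""
    conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse
    load(conn, "stg_core", extract(CORE_EXPORT))
    load(conn, "stg_crm", extract(CRM_EXPORT))
    transform(conn)
    rows = conn.execute(
        "SELECT region, total_balance FROM balance_by_region ORDER BY region"
    ).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for region, total in run_pipeline():
        print(region, total)
```

Running the script prints `North 3480.25` and `South 130.5`. Loading the raw rows first and transforming them inside the database keeps the untouched source data available in staging, so a transformation can be rerun or changed without going back to the source systems. It also replaces the manual blending of siloed data with a single repeatable query.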
